Updated: January 28, 2026 - 14 min read

Organizations everywhere are racing to infuse AI into products and processes to become truly AI-powered organizations. What we’re seeing is a paradigm shift in how businesses operate, make decisions, and deliver value.

The pace of change is dizzying.

The pace of competition, the firehose of information, the speed of decision-making… It has all exponentially increased. And quite frankly, the old ways of working aren’t just inefficient, they are a total recipe for burnout.

— Dave Killeen, Field VP of Product (EMEA) at Pendo, in a recent ProductCon talk.

In other words, simply layering AI on top of old workflows and ways of thinking won’t cut it. Companies need to rethink their operating models to survive and thrive in an AI-driven era. Here’s how to do it right.

Embracing the AI Paradigm Shift in Business Operations

Becoming an AI-powered organization isn’t about shipping a few machine learning features. It’s about changing how the company runs day to day.

In the old model, AI helped teams do the same work a bit faster. In the new model, AI changes how decisions get made, how execution happens, and what “good” looks like.

The product-led organizations pulling ahead are treating AI as an operating layer. They’re redesigning workflows, roles, and governance so AI can create repeatable value across the business, not just in a handful of pilots.

AI is shifting from tool to teammate

AI used to be a productivity add-on. You know, summarize notes, draft copy, speed up analysis. Now it’s moving into creation, ideation, and decision support.

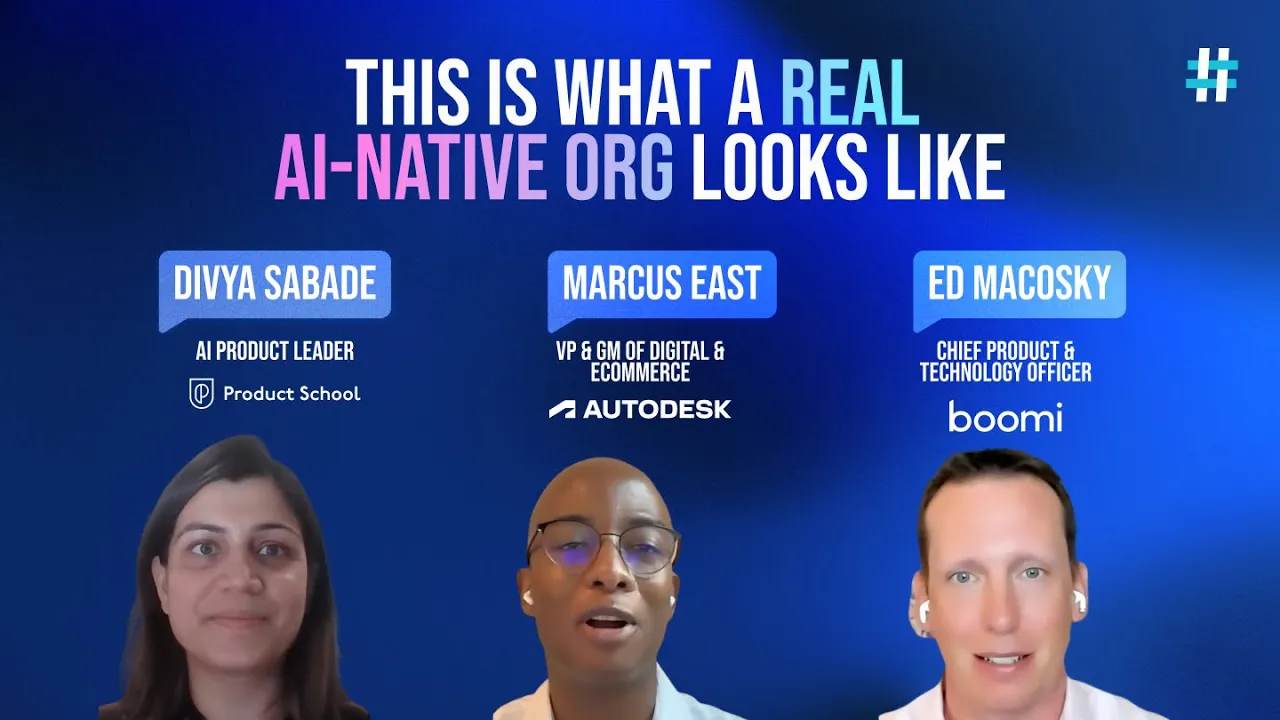

For me, what’s really exciting now is moving away from the mindset that AI is helping me do repetitive tasks, to a space where AI is actually starting to help with some of the generation and even the ideation and creation for our customers.

— Marcus East, VP & GM, Digital & E-commerce at Autodesk, at ProductCon AI 2025.

That’s the shift product leadership needs to internalize. If AI can help generate outputs, not just optimize inputs, your operating model has to evolve to support it.

The AI team structure is changing with it

As AI spreads, clean functional handoffs start to break. Product, engineering, design, data, marketing, sales: everyone gets pulled into the same loop.

The PM role is changing… the expectations on our teams and us have really leveled up in this new world where AI is permeating how we build technology. It almost feels like functions that used to be discrete are now overlapping more and more — product, engineering, design, even sales and marketing.

— Aman Khan at ProductCon AI 2025.

This is why the AI team structure can’t be an afterthought. An AI organization needs tighter cross-functional collaboration, clearer ownership, and shared standards. Otherwise, AI work turns into chaos disguised as product innovation or product experimentation.

Building an AI-Powered Organization: Key Elements of the Operating Model

How can an enterprise effectively implement an AI operating model and become an AI-powered organization?

It starts with a strategic, holistic approach. In practice, designing this operating model means focusing on several key elements of the business:

1. Strategic alignment: tie AI to a business loop, not a demo

Most “AI product strategies” fail for a boring reason. They’re organized around capabilities (“we need an assistant”) instead of outcomes (“we need to cut time-to-value” or “we need to increase activation”). It’s time to focus on outcomes, not outputs.

In an AI organization, alignment means picking a business loop you want to improve, then designing the AI intervention that reliably moves that metric.

Start with one of these alignment anchors:

A customer loop (acquisition → activation → retention), where AI removes friction or increases perceived value

An internal execution loop (idea → build → ship → learn), where AI compresses cycle time without degrading quality

A risk loop (policy → enforcement → audit), where AI increases speed but stays governable

If you can’t name the loop, the owner, and the metric in one breath, you don’t have alignment. You have a prototype.
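One way to make the "loop, owner, metric" test concrete is to record each initiative in a small structure and refuse to call it aligned while any of the three is blank. This is a minimal sketch, not a prescribed process: the `AIInitiative` class, its field names, and the example initiatives are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIInitiative:
    """Hypothetical record for one AI initiative; field names are illustrative."""
    name: str
    loop: str    # the business loop it targets, e.g. "activation"
    owner: str   # the single accountable person or team
    metric: str  # the number the initiative is expected to move


def is_aligned(initiative: AIInitiative) -> bool:
    """Aligned only when loop, owner, and metric are all named (non-blank)."""
    return all(
        value.strip()
        for value in (initiative.loop, initiative.owner, initiative.metric)
    )


# Example data (made up): the first initiative has no owner yet.
assistant = AIInitiative("Onboarding assistant", loop="activation", owner="", metric="time-to-value")
guided_setup = AIInitiative("Guided setup", loop="activation", owner="Growth PM", metric="time-to-value")

for item in (assistant, guided_setup):
    print(item.name, "aligned" if is_aligned(item) else "prototype")
```

A check this simple will not judge whether the chosen metric is the right one. It only forces the three names to exist before work is treated as more than a prototype.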

2. Data foundation: treat context as a product, not a spreadsheet

In an AI-powered organization, “data” isn’t just tables. It’s the full context layer your models and agents pull from: documents, tickets, call transcripts, policies, product telemetry, and whatever lives in SaaS tools.

The practical shift is this. You’re no longer only managing data pipelines. You’re managing context quality, access, and freshness because that’s what determines whether AI outputs are useful, safe, and repeatable.

A high-functioning AI organization typically standardizes a few things early:

Data ownership per domain (who owns customer, pricing, support, identity)

Data contracts and quality checks (what “good” looks like, what breaks the build)

A retrieval layer for unstructured knowledge (search, permissions, versioning, source-of-truth rules)

Feedback capture (what users accepted, edited, ignored, or escalated) so the system can improve

If your AI team structure can’t answer “what’s the source of truth?” and “who approves changes?” for core knowledge, your AI won’t scale. It will drift.
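To illustrate what a data contract with a quality check might look like for a context source, here is a minimal sketch. It assumes a hypothetical "support tickets" source; the contract keys (`owner`, `required_fields`, `max_age`), the 30-day freshness window, and the `contract_violations` helper are assumptions made for illustration, not a standard.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical contract for a "support tickets" context source.
CONTRACT = {
    "owner": "support-platform-team",  # who approves changes to this source
    "required_fields": ["ticket_id", "customer_id", "body", "updated_at"],
    "max_age": timedelta(days=30),     # assumed freshness requirement
}


def contract_violations(record: dict, contract: dict, now: datetime) -> list[str]:
    """Return human-readable violations; an empty list means the record passes."""
    violations = [
        f"missing field: {name}"
        for name in contract["required_fields"]
        if record.get(name) in (None, "")
    ]
    updated_at = record.get("updated_at")
    # Only check freshness when a real timestamp is present.
    if isinstance(updated_at, datetime) and now - updated_at > contract["max_age"]:
        violations.append("stale: older than max_age")
    return violations


now = datetime(2026, 1, 28, tzinfo=timezone.utc)
fresh = {"ticket_id": "T1", "customer_id": "C1", "body": "Login fails", "updated_at": now - timedelta(days=2)}
stale = {"ticket_id": "T2", "customer_id": "", "body": "Old issue", "updated_at": now - timedelta(days=90)}

print(contract_violations(fresh, CONTRACT, now))
print(contract_violations(stale, CONTRACT, now))
```

Running a check like this before content reaches the retrieval layer is one way to decide "what breaks the build": a non-empty list can fail the ingestion job and route the problem to the named owner.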

3. Talent and AI team structure: build a mesh, not a lab

Most companies start AI with a “lab” mindset: hire a few specialists, spin up a central team, ship prototypes. That’s fine for learning, but it’s a dead end for becoming an AI-powered organization.

At scale, the winning pattern looks more like a mesh. You keep a small central group to set standards and platforms, then you embed AI capability into product and domain teams where the decisions actually happen.

A practical AI product team structure usually includes:

A central enablement group (architecture, MLOps, governance, shared components)

Domain AI squads embedded in product areas (PM + engineering + data/ML + design)

Clear model ownership (one accountable owner per model and per major prompt/agent system)

An internal “translation” layer (people who can convert business problems into evaluable AI work)

If your AI organization treats AI as “the data team’s job,” you’ll get scattered pilots and fragile systems. If you treat AI as a core product capability, you get repeatable execution.

4. Technology and platforms: move from “model building” to “capability shipping”

In a mature AI organization, the competitive edge isn’t having a slightly better model. It’s having a platform that can ship AI capabilities safely and repeatedly, across multiple teams, without creating a reliability nightmare.

That means designing for the full lifecycle. Teams need a consistent way to handle data access, retrieval, orchestration, AI evaluation, monitoring, and rollback. They also need shared building blocks so every product squad isn’t reinventing prompts, tools, and guardrails from scratch.

The real shift is organizational. Platform decisions become product decisions: what capabilities can product teams reuse, what standards are mandatory, and what trade-offs you’re willing to accept for speed. If you don’t build this layer, scaling AI will feel like scaling chaos.

5. Governance and risk: make AI auditable before you make it scalable

In an AI-powered organization, governance is an operating constraint you design into the system from day one. The moment AI touches decisions, customers, or money, “vibes-based” shipping stops working.

The practical goal is simple. Every meaningful AI output should be traceable. You need to know what data or documents were used, what version of the model/prompt/agent ran, what rules were applied, and what a human can do when it fails.

This is also where the AI team structure matters. Without clear ownership for models, prompts, retrieval sources, and evaluation thresholds, responsibility gets diluted fast. Then the first incident turns into a blame scavenger hunt instead of a controlled rollback.
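The traceability goal described above can be sketched as a record written alongside every meaningful AI output. This is an illustration under assumptions: the `TraceRecord` fields, the version strings, and the JSON-lines audit format are hypothetical choices, not a required schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TraceRecord:
    """Hypothetical audit record for one AI output; all fields are illustrative."""
    request_id: str
    owner: str                      # accountable owner of the model/prompt system
    model_version: str
    prompt_version: str
    source_ids: tuple[str, ...]     # documents or rows used to produce the output
    rules_applied: tuple[str, ...]  # guardrails or policies that ran
    fallback: str                   # what a human can do when the output is wrong


def to_audit_line(record: TraceRecord) -> str:
    """Serialize the record as one JSON line suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = TraceRecord(
    request_id="req-001",
    owner="support-ai-squad",
    model_version="model-2026-01",
    prompt_version="refund-reply-v3",
    source_ids=("kb/refund-policy@v7",),
    rules_applied=("pii-redaction", "refund-limit-check"),
    fallback="route to tier-2 support queue",
)
print(to_audit_line(record))
```

With a record like this per output, an incident review can start from the request and walk back to the exact sources, versions, and owner instead of reconstructing them from memory.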

Scaling AI Across the Enterprise

Most companies don’t fail at AI because they can’t build a model or an AI agent. They fail because they can’t turn a good pilot into a repeatable system that multiple teams can ship, trust, and improve.

That’s the difference between “we did something cool with AI” and an AI-powered organization. Scaling requires an operating model that makes AI work boring (in the best way).

Why AI prototypes can stall in an AI organization

AI prototyping is necessary, and it is an important skill for both AI PMs and product-led organizations. Prototypes are how teams learn fast, prove value, and de-risk the unknowns. But a prototype can look “successful” because it lives in a controlled setup, and then break the moment it hits enterprise reality: messy data, shared ownership, compliance, and real users.

Pilots usually live in a protected bubble. They have a hand-picked dataset, a heroic team, and a forgiving environment.

Enterprise scale is the opposite: messy data, many stakeholders, shifting priorities, and real risk. If your product team structure and operating model aren’t designed for that reality, the pilot dies the moment it meets production constraints.

Scale the entire pipeline, not just code generation

The quickest path to “AI everywhere” is to stop thinking about AI as a developer tool and start treating it as a delivery system.

Ed Makoski, Chief Product and Technology Officer at Boomi, put it bluntly in a panel session at ProductCon AI 2025:

You have to look at the entire delivery pipeline if you want real speed. It’s not enough to use AI just for code generation. For example, we as an organization introduced agentic AI at each stage of the pipeline so we can deliver products faster.

That’s a scaling mindset. You don’t sprinkle AI into one step and call it an AI digital transformation. You redesign how work moves from idea to outcome.

Standardize the platform so teams can ship safely

At scale, the bottleneck isn’t model access. It’s consistency.

If every team uses different prompts, different data sources, and different AI evaluation rules, you don’t get “many AI use cases.” You get many versions of truth. That’s how an AI organization ends up with regressions that only show up in production, governance that slows everything down, and no shared learning across teams.

A shared platform layer fixes this by standardizing the basics (data access, retrieval, evals, monitoring, and deployment), so the safest approach is also the fastest.

Make adoption measurable, or it stays a side project

If you can’t measure AI adoption in real workflows, you’ll keep debating AI value in abstract terms.

The simplest scaling move is to pick a few operational evaluation metrics that signal behavior change, not hype. Think: how often AI outputs are accepted, where humans override, how much cycle time is compressed, and what failure modes are recurring.

That’s how an AI-powered organization learns. Not through one big product launch, but through instrumented iteration that compounds.
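As a rough illustration of the adoption metrics mentioned above, the share of AI outputs that users accepted, edited, ignored, or escalated can be computed from a simple outcome log. The outcome labels and the sample log below are made up for the example; a real system would define its own event taxonomy.

```python
from collections import Counter

# Hypothetical log: one outcome label per AI suggestion shown to a user.
events = [
    "accepted", "accepted", "edited", "ignored",
    "accepted", "escalated", "edited", "accepted",
]


def adoption_rates(outcomes: list[str]) -> dict[str, float]:
    """Return each outcome's share of all suggestions (empty dict if no data)."""
    total = len(outcomes)
    if total == 0:
        return {}
    counts = Counter(outcomes)
    return {label: counts[label] / total for label in sorted(counts)}


rates = adoption_rates(events)
for label, share in rates.items():
    print(f"{label}: {share:.1%}")
```

Tracked per workflow and per week, shares like these show behavior change directly: a rising edit or escalation share points at a recurring failure mode worth investigating.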

Challenges and Barriers on the AI-First Journey

Transforming into an AI-first enterprise is a complex journey, and it doesn’t happen overnight. Organizations will encounter hurdles that need to be proactively managed.

Prototype purgatory

Prototypes are necessary, but they only prove that something can work. If you don’t turn them into owned, monitored systems, they never scale.

The “who owns this?” gap

When no one owns the model, the prompt, the data source, and the metric, issues bounce between teams. Speed dies in meetings.

Data mirage

Pilot data is clean. Production data is messy, inconsistent, and permissioned, so outputs degrade fast without a real data foundation.

Frankenstack reality

Enterprise AI has to live inside legacy systems, brittle integrations, and security constraints. Scaling fails when the platform can’t handle real constraints.

Metrics that don’t mean anything

“Accuracy” without context is a trap. You need evals tied to outcomes and failure cost, or teams optimize the wrong thing.

Governance as a speed bump

If risk shows up at the end, launch stalls. Build auditability and controls into the workflow so governance enables shipping.

Trust debt

One bad incident can poison adoption for months. Guardrails, transparency, and clear human fallback paths keep trust intact.

Drift everywhere

Models drift, but so do context, policies, and user behavior. Without monitoring and refresh cycles, quality erodes quietly.

Tool sprawl

Different teams using different tools, prompts, and standards lead to fragile systems and surprise regressions. Standardize the basics so teams can move fast, safely.

Translation failure

You don’t just need ML talent. You need people who can convert business problems into evaluable AI work, or you get clever demos instead of outcomes.

5 Steps to Thrive as an AI Organization

Most teams don’t struggle with “what AI can do.” They struggle with making AI reliable, repeatable, and scalable across real workflows, real constraints, and real stakeholders.

These five steps are the operating model moves that separate an AI-powered organization from a pile of pilots. They’re written for product leaders who need a clear playbook, not another generic “embrace innovation” speech.

1. Start with a value loop and run it like a product portfolio

An AI organization wins when AI improves a business loop, not when it demos a clever capability. Pick 2–3 loops where speed and quality matter, then treat them like a portfolio with owners, key product metrics, and a kill switch.

The move that separates an AI-powered organization from “random experiments” is forcing clarity early: what workflow changes, what metric moves, and who owns the outcome. This is also where tying product adoption to outcomes (and tracking leading indicators like time-to-value and experimentation velocity) becomes your operating rhythm.

2. Build a shared platform so teams can ship AI the same way

At enterprise scale, “we have access to a model” is table stakes. The real advantage is a shared layer that standardizes how AI is built, evaluated, deployed, and monitored, so teams do not reinvent (or break) the basics.

High-performing product teams increasingly treat gen AI as a set of swappable components rather than a one-time stack decision. They actively coordinate rollouts so it does not become a pile of disjointed pilots.

If you want this to feel real in practice, pair it with AI prototyping skills. And remember, prototypes are your fast learning engine, but the platform is what turns those learnings into repeatable shipping.

3. Design your AI team structure as a hub-and-spoke system

The most scalable AI team structure is usually hybrid. A small central group sets standards and provides the platform, while domain teams own real use cases and delivery. This avoids the two classic failure modes: a slow central bottleneck or chaotic decentralization.

What tends to stay more centralized is risk/compliance and data governance, while talent and adoption often move toward hybrid models as organizations mature.

This is exactly why role-based upskilling and reskilling matter. Your “spokes” need AI product fluency.

4. Operationalize trust with evals, monitoring, and human review paths

In an AI-powered organization, reliability is a product requirement, not a late-stage checkbox. You need evaluation pipelines that match non-deterministic behavior, plus monitoring that catches drift and regressions before users do.

A practical way to keep this crisp is to define, per workflow:

What “good” means

What failures cost

What gets reviewed by humans

What gets blocked outright

Organizations vary widely in how much gen AI output they review before use, which is a signal that most teams still have not standardized trust mechanisms at scale. This is where AI evals become a core operating capability, not a niche specialty.
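The four per-workflow questions above can be captured as a small policy object that turns an eval score into a routing decision. This is a sketch under assumptions: the `TrustPolicy` fields, the threshold values, and the idea of a single numeric eval score per output are simplifications chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    SHIP = "ship"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


@dataclass(frozen=True)
class TrustPolicy:
    """Hypothetical per-workflow policy; thresholds are illustrative."""
    workflow: str
    good_threshold: float    # score at or above which output counts as "good"
    block_threshold: float   # score below which output is blocked outright
    high_cost_failure: bool  # if True, even "good" output gets human review


def decide(policy: TrustPolicy, eval_score: float) -> Action:
    """Route one AI output based on its eval score and the workflow's policy."""
    if eval_score < policy.block_threshold:
        return Action.BLOCK
    if eval_score < policy.good_threshold or policy.high_cost_failure:
        return Action.HUMAN_REVIEW
    return Action.SHIP


draft_reply = TrustPolicy("support-reply-draft", good_threshold=0.8, block_threshold=0.4, high_cost_failure=False)
refund = TrustPolicy("refund-approval", good_threshold=0.9, block_threshold=0.6, high_cost_failure=True)

print(decide(draft_reply, 0.85).value)
print(decide(refund, 0.95).value)
```

Keeping the policy as data rather than scattered conditionals means each workflow's review rules can be versioned, audited, and changed by its owner without touching the routing code.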

5. Graduate from features to agentic workflows, but keep them governable

The frontier is not “add an assistant.” It’s designing agentic workflows that can execute meaningful work across tools, with clear boundaries, permissions, and fallback plans. That requires product thinking plus system design: routing, reflection, memory, retrieval (RAG), and multi-agent orchestration.

The organizations moving fastest treat agents as an operating model shift. They see humans and AI agents working side by side across increasingly complex workflows.

If you want teams to thrive here, train for execution, not theory: write AI-specific PRDs, design AI-native user flows, prototype quickly, then lock in trust with evals and governance so the AI organization can scale without drama.
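To show what "clear boundaries, permissions, and fallback plans" might mean at the code level, here is a minimal sketch of a per-agent tool allowlist with a human escalation path. The tool names, the `ALLOWED` mapping, and the `run_tool` helper are hypothetical and do not represent any specific agent framework.

```python
from typing import Callable

# Hypothetical tool registry; names and behaviors are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "search_docs": lambda query: f"results for {query!r}",
    "issue_refund": lambda order_id: f"refunded {order_id}",
}

# Per-agent allowlist: the support agent may read, but may not move money.
ALLOWED: dict[str, set[str]] = {"support_agent": {"search_docs"}}


def run_tool(agent: str, tool: str, argument: str) -> str:
    """Run a tool only if the agent is permitted; otherwise fall back to a human."""
    if tool not in TOOLS or tool not in ALLOWED.get(agent, set()):
        return f"escalated to human: {agent} may not call {tool}"
    return TOOLS[tool](argument)


print(run_tool("support_agent", "search_docs", "refund policy"))
print(run_tool("support_agent", "issue_refund", "A-17"))
```

The design choice here is deny-by-default: an agent or tool that is not explicitly listed gets escalated rather than executed, which keeps new agents governable until someone deliberately widens their permissions.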

Where Your AI Organization Goes Next

Right now, the winners are not the teams with the fanciest model. They’re the teams with an operating model that can ship AI capabilities weekly, measure them honestly, and improve them without breaking trust.

The next phase will move even faster.

Agents will take on more real work, workflows will compress, and the gap between “we tried AI” and “we run on AI” will widen exponentially. If your organization builds the platform, the team structure, and the governance to scale, you won’t be chasing the future. You’ll be setting the pace.
